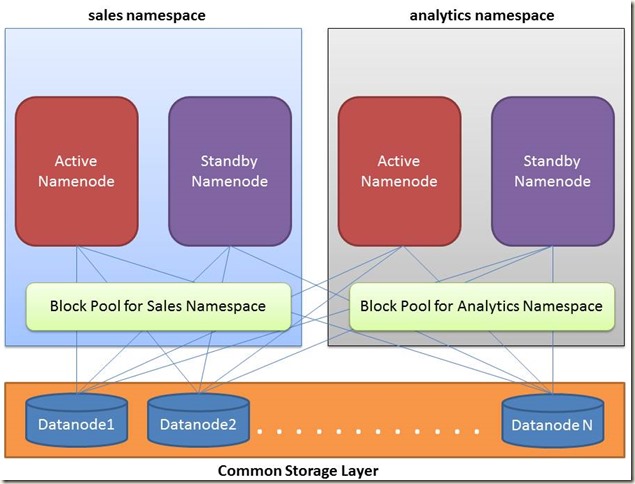

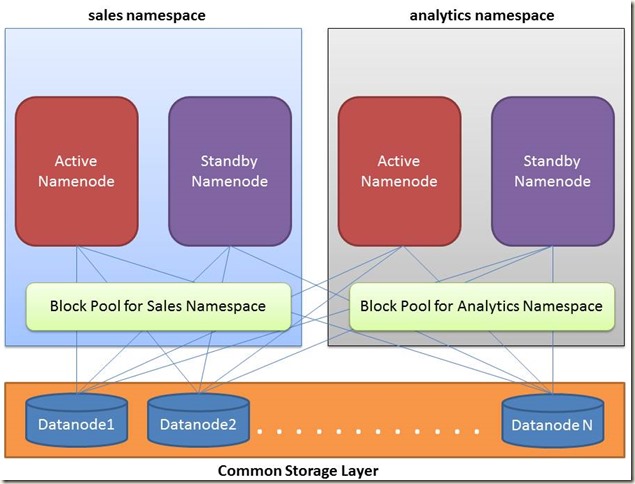

Requirement: We have to configure federated namenodes for our new Hadoop cluster. We need two namenodes for each of our departments, sales and analytics. In my last post, http://pe-kay.blogspot.in/2016/02/change-single-namenode-setup-to.html, I wrote about federated namenode setup; you can refer to that as well. The HDFS federated cluster that we are creating is high priority and users will depend on it completely, so we cannot afford downtime. We therefore have to enable HA for our federated namenodes. There will be 4 namenodes in total: two (1 active and 1 standby) for the sales namespace and two for analytics.

To demonstrate this requirement, I am going to use four VirtualBox VMs running Ubuntu 14.04. The VMs are named server1, server2, server3 and server4.

There are two ways to configure HDFS High Availability: using the Quorum Journal Manager or using shared storage. With shared storage in an HA design, there is always a risk that the shared storage itself fails. As I am using a recent version of Hadoop (2.6), I will use the Quorum Journal Manager, which provides an additional level of HA through a group of JournalNodes (JNs). When the Active node performs any namespace modification, it durably logs a record of the modification to a majority of these JNs. So if we configure multiple JournalNodes, we can tolerate JournalNode failures as well, as long as a majority stays available. For details, you can read the official Hadoop documentation.
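The majority rule is simple arithmetic. Here is a minimal sketch of it; the function names are only illustrative and are not part of Hadoop:

```shell
# Number of JournalNodes that must acknowledge an edit (strict majority of N).
jn_majority() {
  echo $(( $1 / 2 + 1 ))
}

# Number of JournalNodes that can be down while a majority is still reachable.
jn_tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
  echo "JournalNodes=$n majority=$(jn_majority "$n") tolerated_failures=$(jn_tolerated_failures "$n")"
done
```

With the three JournalNodes used in this post, the majority is two, so one JournalNode can fail.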

For configuring HA with automatic failover we also need Zookeeper. For details of Zookeeper, you can visit the link https://zookeeper.apache.org/

Placement of different Services

Namenodes:

1) server1: Will run active/standby namenode for namespace sales

2) server2: Will run active/standby namenode for namespace analytics

3) server3: Will run active/standby namenode for namespace sales

4) server4: Will run active/standby namenode for namespace analytics

Datanodes:

For this demo, I will run datanodes on all the four VMs.

Journal Nodes:

Journal nodes will run on three VMs server1, server2 and server3

Zookeeper:

Zookeeper will run on three VMs: server2, server3 and server4

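Zookeeper Configuration

First I am going to configure a three-node Zookeeper cluster. I am not going to go into the details of Zookeeper and its configuration; I will only cover the settings that are necessary for this document. I have downloaded and deployed Zookeeper on server2, server3 and server4. I am using the default Zookeeper configuration, and for our Zookeeper ensemble I have added the lines below to the Zookeeper configuration file:

server.1=server2:2222:2223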

server.2=server3:2222:2223

server.3=server4:2222:2223
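For orientation, here is a sketch of what the complete configuration file might look like. The post only shows the three server lines, so everything else is an assumption. The tickTime, initLimit, syncLimit and clientPort values are the ones shipped in ZooKeeper's zoo_sample.cfg, and dataDir is inferred from where the myid files are created in the next step. The helper name write_zoo_cfg is hypothetical.

```shell
# Hypothetical helper: writes a minimal zoo.cfg to the path given as $1.
# Only the three server.N lines come from the post; the rest is assumed.
write_zoo_cfg() {
  cat > "$1" <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/home/hadoop/hdfs_data/zookeeper
clientPort=2181
server.1=server2:2222:2223
server.2=server3:2222:2223
server.3=server4:2222:2223
EOF
}

# Write to a scratch location so the sketch does not touch a real install.
cfg="${TMPDIR:-/tmp}/zoo.cfg.example"
write_zoo_cfg "$cfg"
grep -c '^server\.' "$cfg"   # counts the ensemble members
```

The number after `server.` has to match the value written to that host's myid file.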

Create directory for storing Zookeeper data and log files:

hadoop@server2:~$ mkdir /home/hadoop/hdfs_data/zookeeper

hadoop@server3:~$ mkdir /home/hadoop/hdfs_data/zookeeper

hadoop@server4:~$ mkdir /home/hadoop/hdfs_data/zookeeper

Create Zookeeper ID files:

hadoop@server2:~$ echo 1 > /home/hadoop/hdfs_data/zookeeper/myid

hadoop@server3:~$ echo 2 > /home/hadoop/hdfs_data/zookeeper/myid

hadoop@server4:~$ echo 3 > /home/hadoop/hdfs_data/zookeeper/myid

Start Zookeeper cluster

hadoop@server2:~$ zkServer.sh start

hadoop@server3:~$ zkServer.sh start

hadoop@server4:~$ zkServer.sh start

HDFS Configuration

core-site.xml:

We are going to use ViewFS and define which paths map to which namenode. For details about ViewFS, see https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/ViewFs.html. Clients will now load the ViewFS plugin and look for mount table information in the configuration file.

<property>

<name>fs.defaultFS</name>

<value>viewfs:///</value>

</property>

Here we are mapping a folder to a namespace.

Note: In my last blog post on HDFS federation, http://pe-kay.blogspot.in/2016/02/change-single-namenode-setup-to.html, I mapped the path to a namenode's URL, but as we now have an HA configuration, I have to map each path to the respective nameservice instead.

<property>

<name>fs.viewfs.mounttable.default.link./sales</name>

<value>hdfs://sales</value>

</property>

<property>

<name>fs.viewfs.mounttable.default.link./analytics</name>

<value>hdfs://analytics</value>

</property>
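To make the effect of the two mount table links concrete, here is a small sketch that mimics the mapping in plain shell. It is only an illustration. The real resolution happens inside the Hadoop client, and resolve_viewfs is a made-up name.

```shell
# Illustrative only: mimics how the mount table above maps a client path to
# a nameservice URI. It covers just the two links defined in this post.
resolve_viewfs() {
  case "$1" in
    /sales|/sales/*)         echo "hdfs://sales${1#/sales}" ;;
    /analytics|/analytics/*) echo "hdfs://analytics${1#/analytics}" ;;
    *) echo "no mount point for $1" >&2; return 1 ;;
  esac
}

resolve_viewfs /sales/reports/q1.csv
resolve_viewfs /analytics/logs
```

A client command such as `hdfs dfs -ls /sales` is therefore served by whichever namenode of the sales nameservice is currently active.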

We have to specify a directory on the JournalNode machines where the edits and other local state used by the JournalNodes will be stored. Create this directory on all the nodes where the JournalNodes will run.

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/hdfs_data/journalnode</value>

</property>

hdfs-site.xml

Note: I have included only the configurations that are necessary to configure a federated HA cluster.

<!-- Below properties are added for NameNode Federation and HA -->

<!-- Nameservices for our two federated namespaces sales and analytics -->

<property>

<name>dfs.nameservices</name>

<value>sales,analytics</value>

</property>

In each nameservice we will define 2 namenodes, one will be active namenode and the other one will be standby namenode.

<!-- Unique identifiers for each NameNode in the sales nameservice -->

<property>

<name>dfs.ha.namenodes.sales</name>

<value>sales-nn1,sales-nn2</value>

</property>

<!-- Unique identifiers for each NameNode in the analytics nameservice -->

<property>

<name>dfs.ha.namenodes.analytics</name>

<value>analytics-nn1,analytics-nn2</value>

</property>

<!-- RPC address for each NameNode of sales namespace to listen on -->

<property>

<name>dfs.namenode.rpc-address.sales.sales-nn1</name>

<value>server1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.sales.sales-nn2</name>

<value>server3:8020</value>

</property>

<!-- RPC address for each NameNode of analytics namespace to listen on -->

<property>

<name>dfs.namenode.rpc-address.analytics.analytics-nn1</name>

<value>server2:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.analytics.analytics-nn2</name>

<value>server4:8020</value>

</property>

<!-- HTTP address for each NameNode of sales namespace to listen on -->

<property>

<name>dfs.namenode.http-address.sales.sales-nn1</name>

<value>server1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.sales.sales-nn2</name>

<value>server3:50070</value>

</property>

<!-- HTTP address for each NameNode of analytics namespace to listen on -->

<property>

<name>dfs.namenode.http-address.analytics.analytics-nn1</name>

<value>server2:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.analytics.analytics-nn2</name>

<value>server4:50070</value>

</property>

A single set of JournalNodes can provide storage for multiple federated namesystems. So I will configure the same set of JournalNodes running on server1, server2 and server3 for both the nameservices sales and analytics.

<!-- Addresses of the JournalNodes which provide the shared edits storage, written to by the Active NameNode and read by the Standby NameNode -->

Shared edit storage in JournalNodes for sales namespace.

<property>

<name>dfs.namenode.shared.edits.dir.sales</name>

<value>qjournal://server1:8485;server2:8485;server3:8485/sales</value>

</property>

Shared edit storage in JournalNodes for analytics namespace.

<property>

<name>dfs.namenode.shared.edits.dir.analytics</name>

<value>qjournal://server1:8485;server2:8485;server3:8485/analytics</value>

</property>
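Both values follow the same pattern: the JournalNode hosts joined with semicolons, followed by a journal ID, which here is the nameservice name. Here is a sketch of a helper that builds them. The name qjournal_uri is hypothetical, and port 8485 is taken from the values above.

```shell
# Hypothetical helper: builds a qjournal:// URI for one nameservice.
# $1 = journal ID (here the nameservice name), remaining args = JournalNode hosts.
qjournal_uri() {
  journal_id=$1
  shift
  hosts=""
  for h in "$@"; do
    # Append ";" between hosts, but not before the first one.
    hosts="${hosts:+$hosts;}$h:8485"
  done
  echo "qjournal://$hosts/$journal_id"
}

qjournal_uri sales server1 server2 server3
qjournal_uri analytics server1 server2 server3
```

Because the journal ID differs, the two nameservices keep separate edit logs even though they share the same three JournalNodes.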

<!-- Configuring automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>server2:2181,server3:2181,server4:2181</value>

</property>

<!-- Fencing method that will be used to fence the Active NameNode during a failover -->

<!-- sshfence: SSH to the Active NameNode and kill the process -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>
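sshfence can only work if the hadoop user can SSH between the namenode hosts with the key configured above. It also uses fuser on the target host to kill the process listening on the NameNode port. The sketch below is a quick sanity check. It assumes GNU stat as found on Ubuntu, and key_is_private is a made-up helper name.

```shell
# Returns 0 if the file exists and only its owner can access it.
# Uses GNU stat (-c), which is an assumption about the platform.
key_is_private() {
  [ -f "$1" ] || return 1
  mode=$(stat -c '%a' "$1") || return 1
  case "$mode" in
    600|400) return 0 ;;
    *) return 1 ;;
  esac
}

key=/home/hadoop/.ssh/id_rsa
if key_is_private "$key"; then
  echo "$key: permissions look fine"
else
  echo "$key: missing or too permissive (expected 600 or 400)"
fi

# sshfence relies on fuser being installed on the namenode hosts.
command -v fuser >/dev/null 2>&1 || echo "fuser not found; sshfence relies on it"
```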

<!-- Configure the name of the Java class which will be used by the DFS Client to determine which NameNode is the current Active -->

<property>

<name>dfs.client.failover.proxy.provider.sales</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.analytics</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>
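With this many properties it is easy to mistype a name. On a node with Hadoop installed, `hdfs getconf -confKey dfs.nameservices` prints the value Hadoop actually sees. As a rough stand-in that works without Hadoop, the sketch below pulls a value out of a config file with standard tools. get_prop is a made-up helper, it is not a real XML parser, and the sample file is a trimmed copy of the properties above.

```shell
# Prints the <value> of property $2 from the Hadoop-style config file $1.
# Works on simple files only: it flattens the file, puts one property per
# line, then matches <name>...</name> followed by <value>...</value>.
get_prop() {
  name_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for the regex
  tr -d '\n' < "$1" |
    sed 's#</property>#&\
#g' |
    sed -n "s#.*<name>${name_re}</name>[[:space:]]*<value>\([^<]*\)</value>.*#\1#p"
}

# Trimmed sample file so the sketch runs anywhere.
conf="${TMPDIR:-/tmp}/hdfs-site.example.xml"
cat > "$conf" <<'EOF'
<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>sales,analytics</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.sales</name> <value>sales-nn1,sales-nn2</value>
  </property>
</configuration>
EOF

get_prop "$conf" dfs.nameservices
get_prop "$conf" dfs.ha.namenodes.sales
```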

For more about the configuration properties, you can visit https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/HDFSHighAvailabilityWithQJM.html

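ZooKeeper Initialization

To create the required znode for the sales namespace, run the command below from one of the namenodes of the sales nameservice:

hadoop@server1:~/.ssh$ hdfs zkfc -formatZK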

To create the required znode for the analytics namespace, run the command below from one of the namenodes of the analytics nameservice:

hadoop@server2:~/.ssh$ hdfs zkfc -formatZK

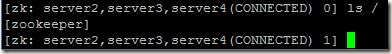

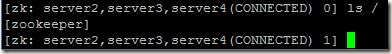

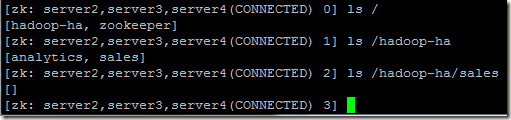

Zookeeper znode tree before running above commands:

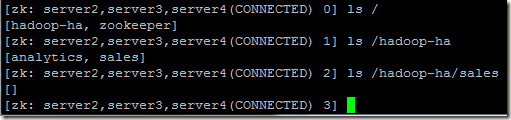

Zookeeper znode tree after running above commands:

Start JournalNodes

hadoop@server1:~$ hadoop-daemon.sh start journalnode

hadoop@server2:~$ hadoop-daemon.sh start journalnode

hadoop@server3:~$ hadoop-daemon.sh start journalnode

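Format Namenodes

While formatting the namenodes, I will use the -clusterID option to provide a name for the Hadoop cluster we are creating. This lets us give the same clusterID to all the namenodes of the cluster.

Formatting the namenodes of the sales nameservice: We have to run the format command (hdfs namenode -format) on one of the namenodes of the sales nameservice. Our sales nameservice will be on server1 and server3. I am running the format command on server1.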

hadoop@server1:~$ hdfs namenode -format -clusterID myCluster

One of the namenodes (on server1) of the sales nameservice has been formatted, so we should now copy the contents of its NameNode metadata directories over to the other, unformatted NameNode on server3.

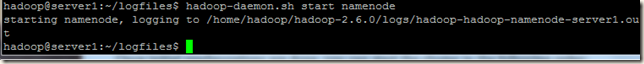

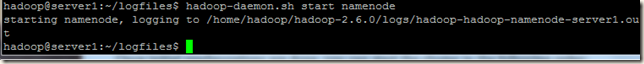

Start the namenode on server1 and run the hdfs namenode -bootstrapStandby command on server3.

hadoop@server1:~$ hadoop-daemon.sh start namenode

hadoop@server3:~$ hdfs namenode -bootstrapStandby

Start the namenode in server3:

hadoop@server3:~$ hadoop-daemon.sh start namenode

Formatting the namenodes of the analytics nameservice: We have to run the format command (hdfs namenode -format) on one of the namenodes of the analytics nameservice. Our analytics nameservice will be on server2 and server4. I am running the format command on server2.

hadoop@server2:~$ hdfs namenode -format -clusterID myCluster

One of the namenodes (on server2) of the analytics nameservice has been formatted, so we should now copy the contents of its NameNode metadata directories over to the other, unformatted NameNode on server4.

Start the namenode on server2 and run the hdfs namenode -bootstrapStandby command on server4.

hadoop@server2:~$ hadoop-daemon.sh start namenode

hadoop@server4:~$ hdfs namenode -bootstrapStandby

Start the namenode in server4:

hadoop@server4:~$ hadoop-daemon.sh start namenode
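Both format commands above pass the same -clusterID, since all namenodes in a federated cluster must share one cluster ID. Each namenode records the ID in the VERSION file under its metadata directory, so it can be checked after formatting. The sketch below reads it. The metadata path depends on dfs.namenode.name.dir, which this post does not show, so the sketch uses stand-in files, and cluster_id is a made-up helper name.

```shell
# Prints the clusterID recorded in a NameNode VERSION file ($1).
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Stand-in VERSION files so the sketch runs anywhere; on a real node, point
# cluster_id at <dfs.namenode.name.dir>/current/VERSION instead.
tmp="${TMPDIR:-/tmp}/version-example.$$"
mkdir -p "$tmp"
printf 'storageType=NAME_NODE\nclusterID=myCluster\n' > "$tmp/sales.VERSION"
printf 'storageType=NAME_NODE\nclusterID=myCluster\n' > "$tmp/analytics.VERSION"

if [ "$(cluster_id "$tmp/sales.VERSION")" = "$(cluster_id "$tmp/analytics.VERSION")" ]; then
  echo "clusterID matches: $(cluster_id "$tmp/sales.VERSION")"
else
  echo "clusterID mismatch between nameservices"
fi
```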

Start remaining services

Start the ZKFailoverController process (zkfc), a ZooKeeper client which also monitors and manages the state of the NameNode, on all the VMs where the namenodes are running.

hadoop@server1:~$ hadoop-daemon.sh start zkfc

hadoop@server2:~$ hadoop-daemon.sh start zkfc

hadoop@server3:~$ hadoop-daemon.sh start zkfc

hadoop@server4:~$ hadoop-daemon.sh start zkfc

Start DataNodes

hadoop@server1:~$ hadoop-daemon.sh start datanode

hadoop@server2:~$ hadoop-daemon.sh start datanode

hadoop@server3:~$ hadoop-daemon.sh start datanode

hadoop@server4:~$ hadoop-daemon.sh start datanode
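Once everything is up, the HA state of each namenode can also be queried from the command line with hdfs haadmin. The sketch below checks that each nameservice has exactly one active namenode. It assumes the nameservice and namenode IDs from hdfs-site.xml above, and one_active is a made-up helper. The live check is skipped when hdfs is not on the PATH.

```shell
# Succeeds only when exactly one of the two reported states is "active".
one_active() {
  case "$1,$2" in
    active,standby|standby,active) return 0 ;;
    *) return 1 ;;
  esac
}

if command -v hdfs >/dev/null 2>&1; then
  for ns in sales analytics; do
    # -ns selects the nameservice; the last argument is the namenode ID.
    s1=$(hdfs haadmin -ns "$ns" -getServiceState "$ns-nn1")
    s2=$(hdfs haadmin -ns "$ns" -getServiceState "$ns-nn2")
    if one_active "$s1" "$s2"; then
      echo "$ns: OK ($ns-nn1=$s1, $ns-nn2=$s2)"
    else
      echo "$ns: unexpected states ($ns-nn1=$s1, $ns-nn2=$s2)"
    fi
  done
else
  echo "hdfs not on PATH; skipping live check"
fi
```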

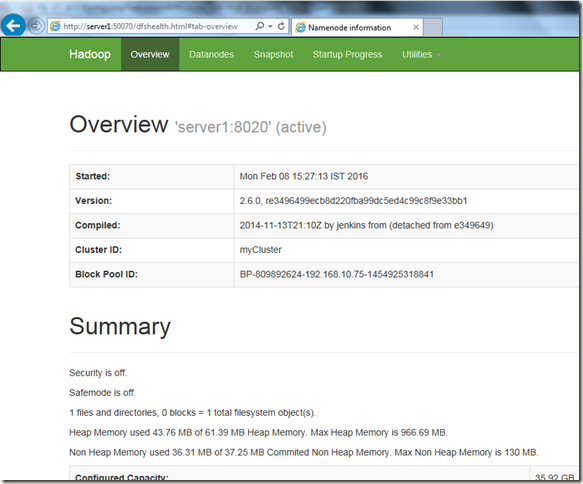

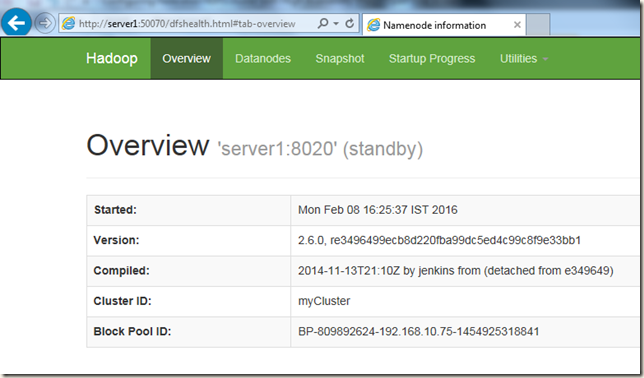

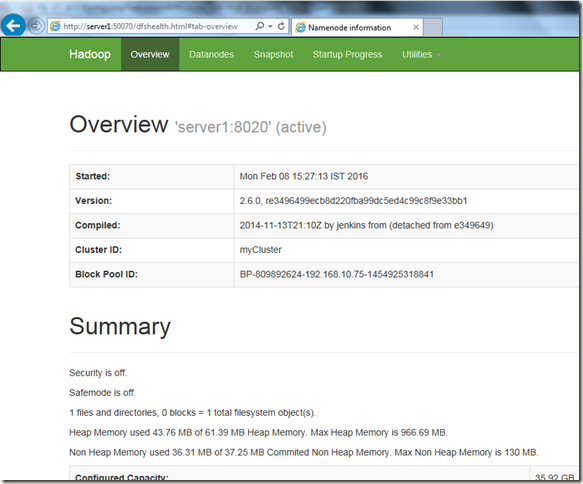

Checking our cluster

sales namespace:

Let's check the namenodes of the sales namespace.

Open the URLs http://server1:50070 and http://server3:50070 in a web browser.

We can see that the namenode in server1 is active right now.

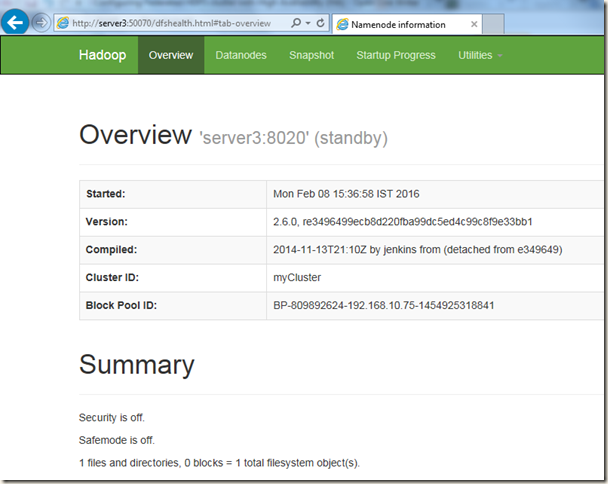

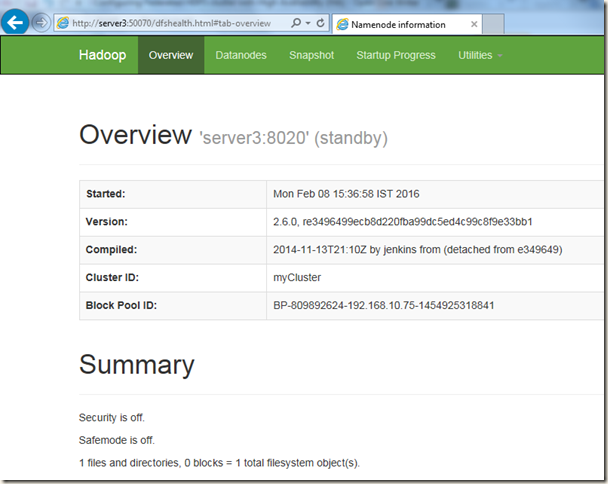

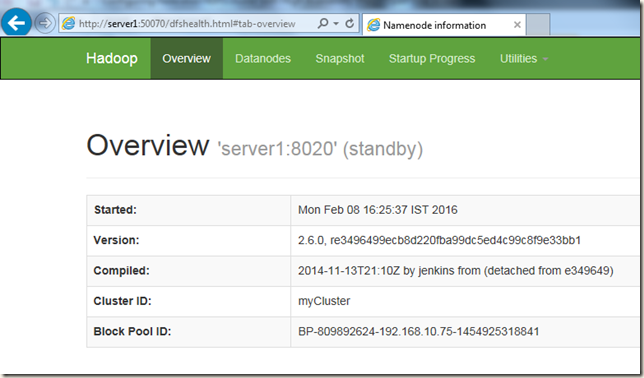

Namenode in server3 is standby

analytics namespace:

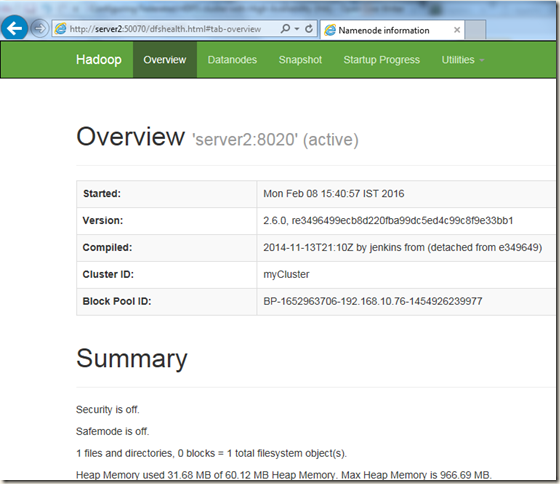

Open the URLs http://server2:50070 and http://server4:50070 in a web browser.

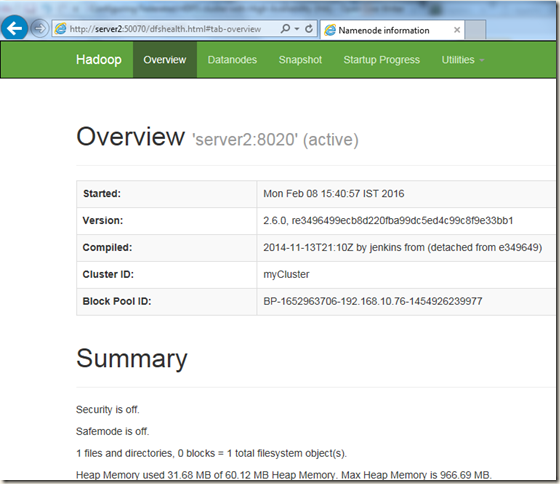

server2 is active now

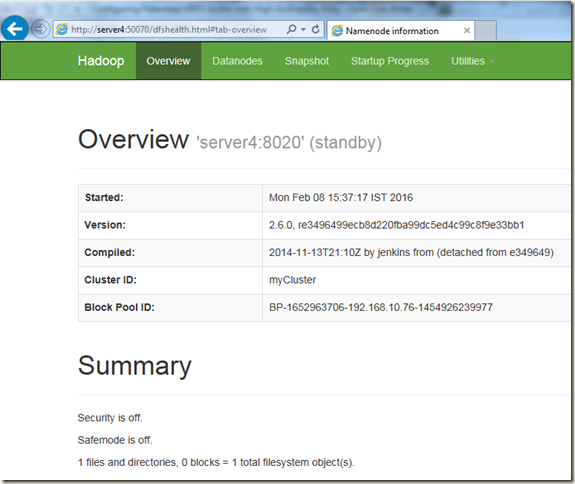

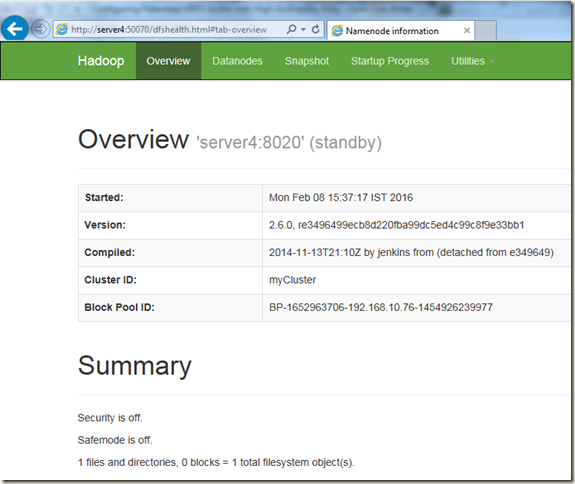

server4 is standby

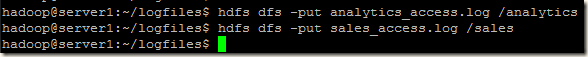

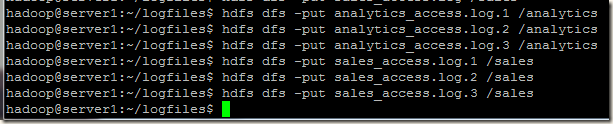

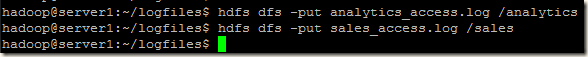

Now I will copy two files into our two namespace folders, /sales and /analytics, and check whether they end up on the correct namenode:

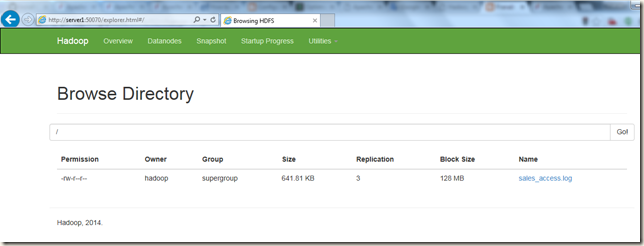

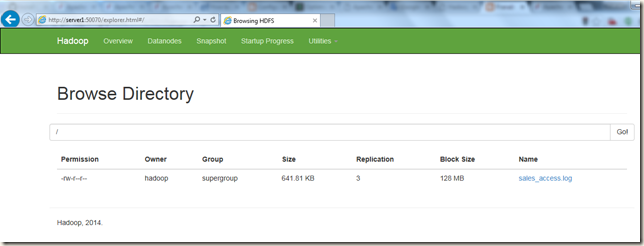

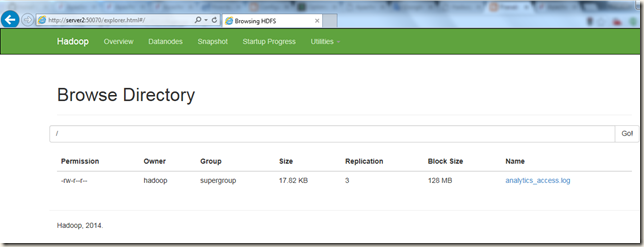

Checking in server1 (active namenode for /sales nameservice), we can see that namenode running on server1 has files only related to sales namespace.

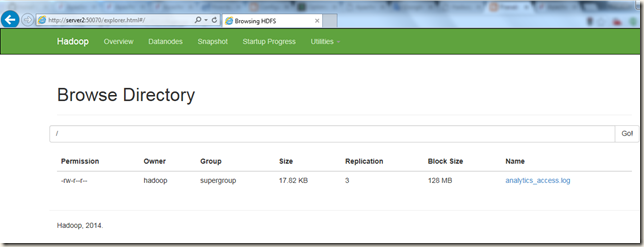

Similarly, server2 (active namenode for /analytics nameservice), we can see that namenode running on server2 has files only related to analytics namespace.

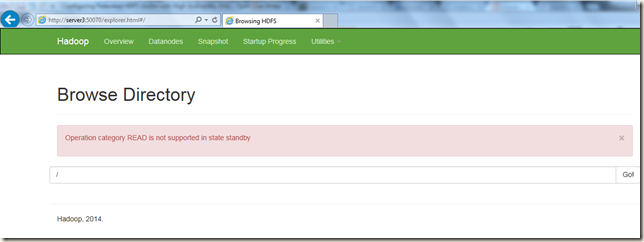

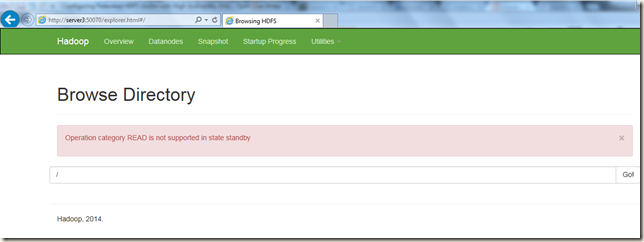

If we try to read from a standby namenode, we get an error. In the screenshot below, I tried to read from server3 (standby namenode for the sales nameservice) and got an error.

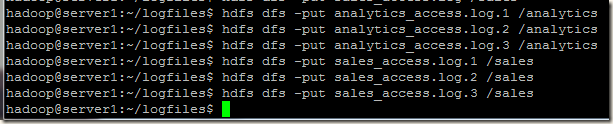

Let's put a few more files and check whether automatic failover is working:

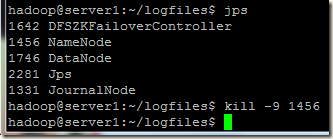

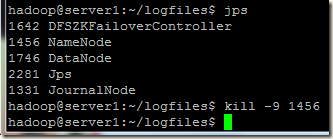

I am killing the active namenode of /sales namespace running on server1

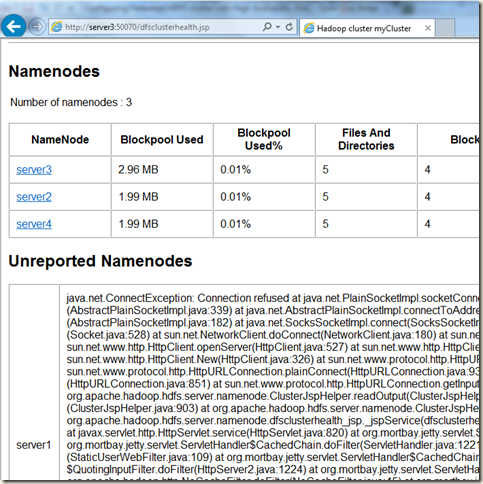

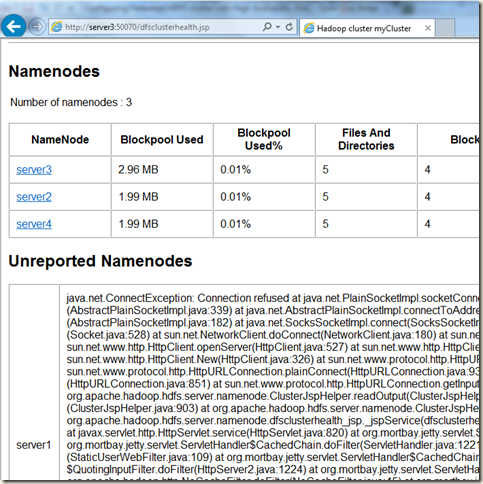

If we check the cluster health, we can see that server1 is down.

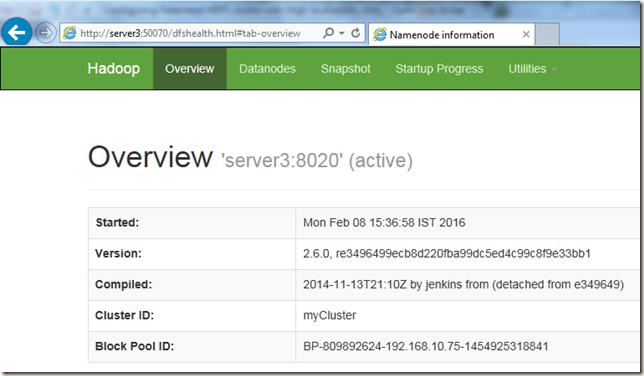

Now if we check the standby namenode of sales namespace running on server3, we can see that it has become active now:

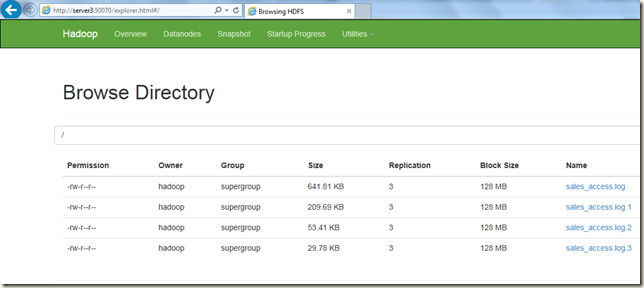

Let's check the files: we can see that the files are still available. HA is working, and so is automatic failover.

I am starting the namenode in server1 again.

Now if we check the status of the namenode in server1, we can see that it has become standby as expected.

One Final Note:

Once initial configurations are done, you can start the cluster in the following order:

First start the Zookeeper services:

hadoop@server2:~$ zkServer.sh start

hadoop@server3:~$ zkServer.sh start

hadoop@server4:~$ zkServer.sh start

After that start journalnodes

hadoop@server1:~$ hadoop-daemon.sh start journalnode

hadoop@server2:~$ hadoop-daemon.sh start journalnode

hadoop@server3:~$ hadoop-daemon.sh start journalnode

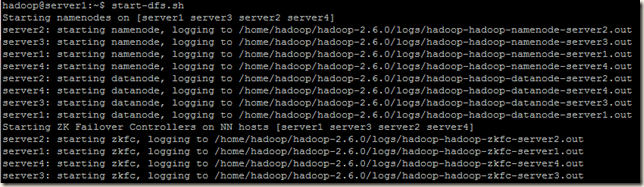

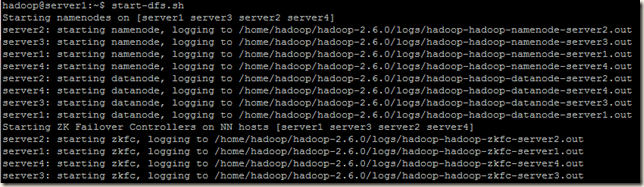

Finally, start all the namenodes, datanodes and ZKFailoverController processes using the start-dfs.sh script.
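The start order above can be wrapped in a small script. This is only a sketch under several assumptions: passwordless SSH for the hadoop user to every host, zkServer.sh and hadoop-daemon.sh on the remote PATH, and start-dfs.sh being run from a node that has the Hadoop scripts. By default it only prints the commands. Set DRY_RUN=0 to execute them.

```shell
# run: prints the command when DRY_RUN is 1 (the default), executes it otherwise.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

start_cluster() {
  # 1. Zookeeper ensemble
  for h in server2 server3 server4; do
    run ssh "hadoop@$h" zkServer.sh start
  done
  # 2. JournalNodes
  for h in server1 server2 server3; do
    run ssh "hadoop@$h" hadoop-daemon.sh start journalnode
  done
  # 3. Namenodes, datanodes and ZKFailoverControllers
  run start-dfs.sh
}

start_cluster
```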

For demonstration of this requirement, I am going to use 4 virtual box with Ubuntu 14.04 VMs. The VMs are named as server1, server2, server3 and server4.

There are two ways we can configure HDFS High Availability, using Using the Quorum Journal Manager or using Shared Storage. Using shared storage in HA design, there is always a risk of failure of the shared storage. As I am going to use more latest version of hadoop (i.e. 2.6), so I will use Quorum Journal Manager which provides additional level of HA by having a group of JournalNodes. When any namespace modification is performed by the Active node, it durably logs a record of the modification to a majority of these JNs. So if we configure multiple journalnodes, then we can afford jornalnode failures also. For details you can read offical hadoop documents.

For configuring HA with automatic failover we also need Zookeeper. For details of Zookeeper, you can visit the link https://zookeeper.apache.org/

Placement of different Services

Namenodes:1) server1 : Will run active/standby namenode for namespace sales

2) server2: Will run active/standby namenode for namespace analytics

3) server3: Will run active/standby namenode for namespace sales

4) server4: Will run active/standby namenode for namespace analytics

Datanodes:

For this demo, I will run datanodes on all the four VMs.

Journal Nodes:

Journal nodes will run on three VMs server1, server2 and server3

Zookeeper:

Jookeeper will run on three VMs server2, server3 and server4

Zookeeper Configuration

First I am going to configure a Zookeeper three node cluster. I am not going to write details about Zookeeper and its configuration, I will only write the configs that is necessary for this document. I have downloaded and deployed Zookeeper in server2, server3 and server4 servers. I am using the default Zookeeper configuration, for our zookeeper ensemble I have added the below lines in Zookeeper configuration file:server.1=server2:2222:2223

server.2=server3:2222:2223

server.3=server4:2222:2223

Create directory for storing Zookeeper data and log files:

hadoop@server2:~$ mkdir /home/hadoop/hdfs_data/zookeeper

hadoop@server3:~$ mkdir /home/hadoop/hdfs_data/zookeeper

hadoop@server4:~$ mkdir /home/hadoop/hdfs_data/zookeeper

Create Zookeeper ID files:

hadoop@server2:~$ echo 1 > /home/hadoop/hdfs_data/zookeeper/myid

hadoop@server3:~$ echo 2 > /home/hadoop/hdfs_data/zookeeper/myid

hadoop@server4:~$ echo 3 > /home/hadoop/hdfs_data/zookeeper/myid

Start Zookeeper cluster

hadoop@server2:~$ zkServer.sh start

hadoop@server3:~$ zkServer.sh start

hadoop@server4:~$ zkServer.sh start

HDFS Configuration

core-site.xml:We are going to use ViewFS and define which paths map to which namenode. For details about ViewFS you may visit this https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/ViewFs.html link. Now the clients will load the ViewFS plugin and look for mount table information in the configuration file.

<property>

<name>fs.defaultFS</name>

<value>viewfs:///</value>

</property>

Here we are mapping a folder to a namespace

Note: In last blog post on HDFS federation http://pe-kay.blogspot.in/2016/02/change-single-namenode-setup-to.html, I mapped the path to a namenode’s URL, but as we are now into a HA configuration so I have to set the mapping to the respective nameservice.

<property>

<name>fs.viewfs.mounttable.default.link./sales</name>

<value>hdfs://sales</value>

</property>

<property>

<name>fs.viewfs.mounttable.default.link./analytics</name>

<value>hdfs://analytics</value>

</property>

We have to give a directory name in JournalNode machines where the edits and other local state used by the JournalNodes will be stored. Create this directory in all the nodes where the journalnodes will run.

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/hdfs_data/journalnode</value>

</property>

hdfs-site.xml

Note: I have included only those configurations which are necessary to configure federated HA cluster.

<!-- Below properties are added for NameNode Federation and HA -->

<!-- Nameservices for our two federated namespaces sales and analytics -->

<property>

<name>dfs.nameservices</name>

<value>sales,analytics</value>

</property>

In each nameservice we will define 2 namenodes, one will be active namenode and the other one will be standby namenode.

<!-- Unique identifiers for each NameNodes in the sales nameservice -->

<property>

<name>dfs.ha.namenodes.sales</name>

<value>sales-nn1,sales-nn2</value>

</property>

<!-- Unique identifiers for each NameNodes in the analytics nameservice -->

<property>

<name>dfs.ha.namenodes.analytics</name>

<value>analytics-nn1,analytics-nn2</value>

</property>

<!-- RPC address for each NameNode of sales namespace to listen on -->

<property>

<name>dfs.namenode.rpc-address.sales.sales-nn1</name>

<value>server1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.sales.sales-nn2</name>

<value>server3:8020</value>

</property>

<!-- RPC address for each NameNode of analytics namespace to listen on -->

<property>

<name>dfs.namenode.rpc-address.analytics.analytics-nn1</name>

<value>server2:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.analytics.analytics-nn2</name>

<value>server4:8020</value>

</property>

<!-- HTTP address for each NameNode of sales namespace to listen on -->

<property>

<name>dfs.namenode.http-address.sales.sales-nn1</name>

<value>server1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.sales.sales-nn2</name>

<value>server3:50070</value>

</property>

<!-- HTTP address for each NameNode of analytics namespace to listen on -->

<property>

<name>dfs.namenode.http-address.analytics.analytics-nn1</name>

<value>server2:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.analytics.analytics-nn2</name>

<value>server4:50070</value>

</property>

A single set of JournalNodes can provide storage for multiple federated namesystems. So I will configure the same set of JournalNodes running on server1, server2 and server3 for both the nameservices sales and analytics.

<!-- Addresses of the JournalNodes which provide the shared edits storage, written to by the Active nameNode and read by the Standby NameNode –>

Shared edit storage in JournalNodes for sales namespace.

<property>

<name>dfs.namenode.shared.edits.dir.sales</name>

<value>qjournal://server1:8485;server2:8485;server3:8485/sales</value>

</property>

Shared edit storage in JournalNodes for analytics namespace.

<property>

<name>dfs.namenode.shared.edits.dir.analytics</name>

<value>qjournal://server1:8485;server2:8485;server3:8485/analytics</value>

</property>

<!-- Configuring automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>server2:2181,server3:2181,server4:2181</value>

</property>

<!-- Fencing method that will be used to fence the Active NameNode during a failover -->

<!-- sshfence: SSH to the Active NameNode and kill the process -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<!-- Configure the name of the Java class which will be used by the DFS Client to determine which NameNode is the current Active -->

<property>

<name>dfs.client.failover.proxy.provider.sales</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.analytics</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

For more about the configuration properties, you can visit https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/HDFSHighAvailabilityWithQJM.html

ZooKeeper Initialization

For creating required znode of sales namespace, run the below command from one of the Namenodes of sales nameservicehadoop@server1:~/.ssh$ hdfs zkfc –formatZK

For creating required znode of analytics namespace, run the below command from one of the Namenodes of analytics nameservice

hadoop@server2:~/.ssh$ hdfs zkfc –formatZK

Zookeeper znode tree before running above commands:

Zookeeper znode tree after running above commands:

Start JournalNodes

hadoop@server1:~$ hadoop-daemon.sh start journalnodehadoop@server2:~$ hadoop-daemon.sh start journalnode

hadoop@server3:~$ hadoop-daemon.sh start journalnode

Format Namenodes

While formating the namenodes, I will use the -clusterID option to provide a name for the hadoop cluster we are creating. This will enable us to provide the same clusterID for all the namenodes of my cluster.Formating the namenodes of sales nameservice:We have to run the format command (hdfs namenode -format) on one of NameNodes of sales nameservice. Our sales nameservice will be on server1 and server3. I am running the format command in server1.

hadoop@server1:~$ hdfs namenode -format -clusterID myCluster

One of the namenodes (in server1) of sales nameservice has been formated, so we should now copy over the contents of the NameNode metadata directories to the other, unformatted NameNode server3.

Start the namenode in server1 and run the hdfs namenode –bootstrapStandby command in server3.

hadoop@server1:~$ hadoop-daemon.sh start namenode

hadoop@server3:~$ hdfs namenode –bootstrapStandby

Start the namenode in server3:

hadoop@server3:~$ hadoop-daemon.sh start namenode

Formating the namenodes of analytics nameservice:We have to run the format command (hdfs namenode -format) on one of NameNodes of analytics nameservice. Our analytics nameservice will be on server2 and server4. I am running the format command in server2.

hadoop@server2:~$ hdfs namenode -format -clusterID myCluster

One of the namenodes (in server2) of analytics nameservice has been formated, so we should now copy over the contents of the NameNode metadata directories to the other, unformatted NameNode server4.

Start the namenode in server2 and run the hdfs namenode –bootstrapStandby command in server4.

hadoop@server2:~$ hadoop-daemon.sh start namenode

hadoop@server4:~$ hdfs namenode –bootstrapStandby

Start the namenode in server4:

hadoop@server4:~$ hadoop-daemon.sh start namenode

Start remaining services

Start the ZKFailoverController process (zkfs, it is a ZooKeeper client which also monitors and manages the state of the NameNode.) in all the VMs where the namenodes are running.

hadoop@server1:~$ hadoop-daemon.sh start zkfc

hadoop@server2:~$ hadoop-daemon.sh start zkfc

hadoop@server3:~$ hadoop-daemon.sh start zkfc

hadoop@server4:~$ hadoop-daemon.sh start zkfc

Start DataNodes

hadoop@server1:~$ hadoop-daemon.sh start datanode

hadoop@server2:~$ hadoop-daemon.sh start datanode

hadoop@server3:~$ hadoop-daemon.sh start datanode

hadoop@server4:~$ hadoop-daemon.sh start datanode

Checking our cluster

sales namespace:Lets check the namenodes of sales namespace

Open the URLs in web-browser http://server1:50070 and http://server3:50070

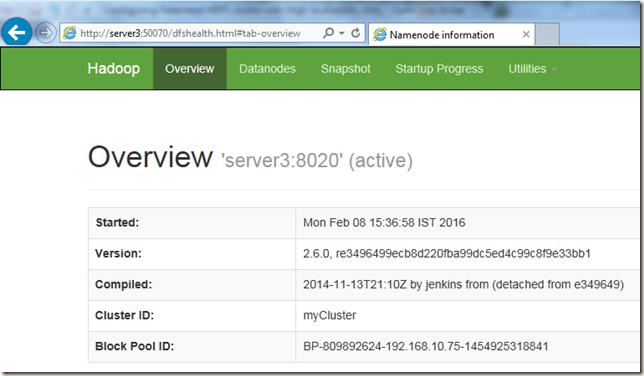

We can see that the namenode in server1 is active right now.

Namenode in server3 is standby

analytics namespace:

Open the URLs in web-browser http://server2:50070 and http://server4:50070

server2 is active now

server4 is standby

Now I will copy two files into our two namespace folders /sales and /analytics and will check if they are in correct namenode:

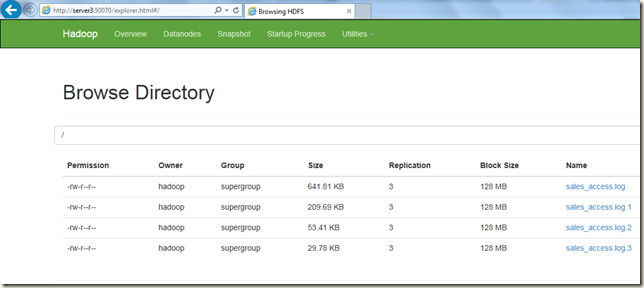

Checking in server1 (active namenode for /sales nameservice), we can see that namenode running on server1 has files only related to sales namespace.

Similarly, server2 (active namenode for /analytics nameservice), we can see that namenode running on server2 has files only related to analytics namespace.

If we try to read from a standby namenode, we will get error. In the below screenshot I tried to read from server3 (standby namenode for /sales nameservice) and got error.

Lets put few more files and check if the automatic failover is working:

I am killing the active namenode of /sales namespace running on server1

If we check the cluster health, we can see that serve1 is down.

Now if we check the standby namenode of sales namespace running on server3, we can see that it has become active now:

Lets check the files, we can see the files are also available,

I am starting the namenode in server1 again.

Now if we check the status of the namenode in server1, we can see that it has become standby as expected.

One Final Note:

Once initial configurations are done, you can start the cluster in the following order:

First start the Zookeeper services:

hadoop@server2:~$ zkServer.sh start

hadoop@server3:~$ zkServer.sh start

hadoop@server4:~$ zkServer.sh start

After that start journalnodes

hadoop@server1:~$ hadoop-daemon.sh start journalnode

hadoop@server2:~$ hadoop-daemon.sh start journalnode

hadoop@server3:~$ hadoop-daemon.sh start journalnode

Finally all the namenodes, datanodes and ZKFailoverController processes using the start-dfs.sh script.
