The NameNode determines whether a DataNode is dead or alive by using heartbeats. Each DataNode sends a heartbeat message to the NameNode every 3 seconds by default. This interval is controlled by the dfs.heartbeat.interval property (in seconds) in the hdfs-site.xml file.
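To make the mechanism concrete, here is a minimal Python sketch of heartbeat-based liveness tracking. It is a simplified model, not the NameNode's actual implementation. The class `HeartbeatMonitor`, its methods, and the explicit `now_ms` timestamps are hypothetical. The sketch checks a node on demand, whereas the real NameNode performs this check periodically. The expiry window `expire_ms` stands in for the dead-node timeout discussed in this post.

```python
class HeartbeatMonitor:
    """Toy model of heartbeat-based liveness tracking (hypothetical, not HDFS code).

    The monitor remembers when it last heard from each datanode and treats a
    node as dead once its latest heartbeat is older than expire_ms.
    """

    def __init__(self, expire_ms):
        self.expire_ms = expire_ms
        self.last_heartbeat_ms = {}  # datanode address -> time of last heartbeat (ms)

    def heartbeat(self, node, now_ms):
        # Record the arrival time of the latest heartbeat from this datanode.
        self.last_heartbeat_ms[node] = now_ms

    def is_dead(self, node, now_ms):
        # Unknown nodes, and nodes silent for longer than the window, count as dead.
        last = self.last_heartbeat_ms.get(node)
        return last is None or now_ms - last > self.expire_ms

    def live_nodes(self, now_ms):
        # Nodes whose last heartbeat is still inside the expiry window.
        return sorted(n for n in self.last_heartbeat_ms if not self.is_dead(n, now_ms))


monitor = HeartbeatMonitor(expire_ms=630_000)
monitor.heartbeat("192.168.10.75", now_ms=0)
print(monitor.is_dead("192.168.10.75", now_ms=600_000))  # False: still inside the window
print(monitor.is_dead("192.168.10.75", now_ms=631_000))  # True: window exceeded
```

Until the window expires, the node still appears in `live_nodes`. This is the situation that causes the failed block writes described below.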

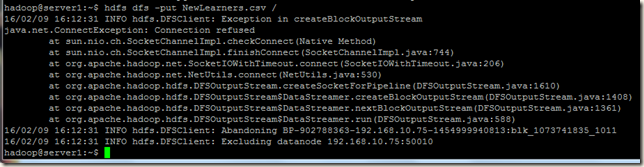

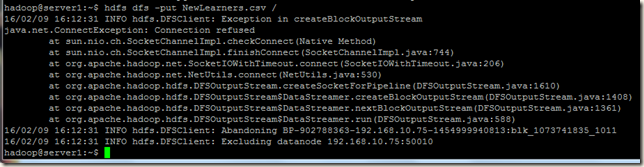

If a datanode dies, the namenode waits about 10 and a half minutes before removing it from the list of live nodes. Until the datanode is marked dead, copying data into HDFS can fail whenever that datanode is selected to store one of the file's blocks. In our case, the datanode running on 192.168.10.75 had actually died, but the namenode had not yet marked it dead. So when we copied a file to HDFS, the block write to 192.168.10.75 failed with the error shown in the screenshot.

The time after which a datanode is considered dead is calculated as:

2 * dfs.namenode.heartbeat.recheck-interval + 10 * 1000 * dfs.heartbeat.interval

The default value of dfs.namenode.heartbeat.recheck-interval is 300000 milliseconds (5 minutes). The default value of dfs.heartbeat.interval is 3 seconds.

Plugging the default values into the formula gives:

2 * 300000 + 10 * 1000 * 3 = 630000 milliseconds

That is 10 minutes and 30 seconds. With the default settings, the namenode marks a datanode as dead after 10 minutes and 30 seconds without a heartbeat.
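The arithmetic above can be reproduced with a small Python helper. The function names `heartbeat_expire_interval_ms` and `format_ms` are hypothetical. The helper simply encodes the formula from this post, taking the recheck interval in milliseconds and the heartbeat interval in seconds.

```python
def heartbeat_expire_interval_ms(recheck_interval_ms, heartbeat_interval_s):
    """Dead-node timeout in ms, per the formula in this post.

    recheck_interval_ms  -> dfs.namenode.heartbeat.recheck-interval (milliseconds)
    heartbeat_interval_s -> dfs.heartbeat.interval (seconds)
    """
    return 2 * recheck_interval_ms + 10 * 1000 * heartbeat_interval_s


def format_ms(ms):
    # Render a millisecond duration as "M min S s".
    minutes, seconds = divmod(ms // 1000, 60)
    return f"{minutes} min {seconds} s"


default_ms = heartbeat_expire_interval_ms(300_000, 3)
print(default_ms, format_ms(default_ms))  # 630000 10 min 30 s
```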

In some cases this 10-minute-30-second window is too long. We can shorten it, mainly by lowering the dfs.namenode.heartbeat.recheck-interval property. For example, suppose we want a datanode to be marked dead after around 4 to 5 minutes. We can set the dfs.namenode.heartbeat.recheck-interval property in the hdfs-site.xml file:
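Because the heartbeat term is fixed at 10 * 1000 * 3 = 30000 ms with the default heartbeat interval, the formula can be solved for the recheck interval that gives a target timeout. This is a minimal sketch with a hypothetical function name. It assumes dfs.heartbeat.interval stays at its default of 3 seconds unless another value is passed.

```python
def recheck_interval_for_target_ms(target_expire_ms, heartbeat_interval_s=3):
    """Solve 2 * recheck + 10 * 1000 * heartbeat = target for recheck (ms)."""
    heartbeat_part_ms = 10 * 1000 * heartbeat_interval_s
    if target_expire_ms <= heartbeat_part_ms:
        raise ValueError("target must exceed 10 * 1000 * dfs.heartbeat.interval")
    # Floor division rounds down, so the resulting timeout never exceeds the target.
    return (target_expire_ms - heartbeat_part_ms) // 2


for minutes in (4, 4.5, 5):
    target_ms = int(minutes * 60 * 1000)
    print(f"{minutes} min -> {recheck_interval_for_target_ms(target_ms)} ms")
# 4 min -> 105000 ms
# 4.5 min -> 120000 ms
# 5 min -> 135000 ms
```

A 4.5-minute target yields 120000 ms, which matches the value used in the configuration in this post.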

```xml
<property>
  <name>dfs.namenode.heartbeat.recheck-interval</name>
  <value>120000</value>
</property>
```
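To check which timeout a given configuration produces, the two properties can be read directly from hdfs-site.xml. The sketch below uses Python's standard `xml.etree.ElementTree`, and its function names are hypothetical. When a property is absent, it falls back to the default values stated in this post. It assumes plain integer values with no time-unit suffixes.

```python
import xml.etree.ElementTree as ET

# Defaults from this post: recheck interval in ms, heartbeat interval in seconds.
DEFAULTS = {
    "dfs.namenode.heartbeat.recheck-interval": 300_000,
    "dfs.heartbeat.interval": 3,
}


def read_heartbeat_settings(xml_text):
    """Return the two heartbeat properties from hdfs-site.xml content.

    Assumes each <value> is a plain integer (e.g. "120000", not "2m").
    """
    settings = dict(DEFAULTS)
    root = ET.fromstring(xml_text)  # the <configuration> element
    for prop in root.findall("property"):
        name = prop.findtext("name", default="").strip()
        if name in settings:
            settings[name] = int(prop.findtext("value", default="").strip())
    return settings


def expire_interval_ms(settings):
    # Same formula as in the post: 2 * recheck + 10 * 1000 * heartbeat.
    return (2 * settings["dfs.namenode.heartbeat.recheck-interval"]
            + 10 * 1000 * settings["dfs.heartbeat.interval"])


HDFS_SITE = """<configuration>
  <property>
    <name>dfs.namenode.heartbeat.recheck-interval</name>
    <value>120000</value>
  </property>
</configuration>"""

print(expire_interval_ms(read_heartbeat_settings(HDFS_SITE)))  # 270000
```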

Now let's calculate the timeout again:

2 * 120000 + 10 * 1000 * 3 = 270000 milliseconds

That is 4 minutes and 30 seconds. With this setting, the namenode now marks a datanode as dead after 4 minutes and 30 seconds.

