MongoDB's oplog is a capped collection that records all data-changing operations in the databases. Because it is a capped collection, it can hold database changes only for a limited period of time.

Consider the following scenario: say our oplog can store changes for the last 24 hours, and our daily backup takes place at 3AM. Suppose a user fires a drop command by mistake and drops a collection at 11AM. To recover the dropped collection, we can restore the 3AM backup and then apply the oplog up to 11AM (up to, but not including, the drop statement).

Now suppose our oplog is small and can store changes for only 4 hours. In this case we cannot perform point-in-time recovery for the scenario above, because at 11AM the oplog reaches back only to 7AM and the changes made between 3AM and 7AM have already been overwritten. So make sure that you have a large enough oplog, or that you back up your oplog frequently (before entries are overwritten).
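The reasoning above comes down to one comparison: the oplog window must reach back at least as far as the last backup. Here is a minimal shell sketch of that check; `can_recover` is a hypothetical helper name, and all values are in hours.

```shell
# Hypothetical helper: succeeds if the oplog window reaches back to the last backup.
# $1 = oplog window in hours, $2 = hours elapsed since the last backup
can_recover() {
  [ "$1" -ge "$2" ]
}

# Backup at 3AM, mistake at 11AM: 8 hours of oplog are needed.
hours_since_backup=$((11 - 3))

if can_recover 24 "$hours_since_backup"; then
  echo "24-hour oplog: point-in-time recovery is possible"
fi
if ! can_recover 4 "$hours_since_backup"; then
  echo "4-hour oplog: changes between 3AM and 7AM are already overwritten"
fi
```

On a running instance, `db.printReplicationInfo()` in the mongo shell reports the actual time span the oplog currently covers.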

The oplog is enabled when we start a MongoDB replica set. On a standalone MongoDB instance, if we need an oplog we can start the mongod instance as a master (master-slave replication) or as a single-node replica set.

In this example I am going to show the recovery process on a standalone MongoDB instance. I will enable the oplog on this instance by starting it as the master node of master-slave replication. (Master-slave replication is not recommended; a replica set is the recommended approach. I am using master-slave replication here only for tutorial purposes.)

To start MongoDB with an oplog under master-slave replication, use the --master option.

mongod --config /etc/mongod.conf --master
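For comparison, the recommended alternative, a single-node replica set, might be started roughly as follows. This is only a sketch: the replica set name `rs0` and both function names are placeholders, not part of the original setup.

```shell
# Start mongod as a member of a replica set named by $1 (default: rs0).
start_mongod_rs() {
  mongod --config /etc/mongod.conf --replSet "${1:-rs0}"
}

# Run once, from another terminal, after mongod is up.
# rs.initiate() makes this node the primary and creates local.oplog.rs.
init_single_node_rs() {
  mongo --eval 'rs.initiate()'
}

# Usage:
#   start_mongod_rs          # terminal 1
#   init_single_node_rs      # terminal 2
```

With this variant the oplog lives in oplog.rs rather than oplog.$main, so later steps that name the oplog collection would need adjusting.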

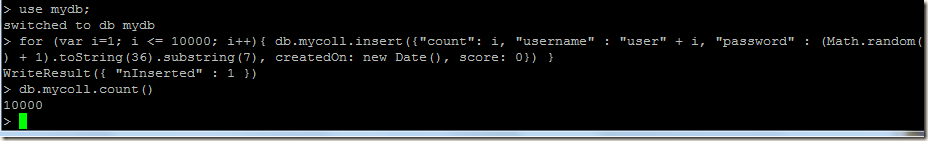

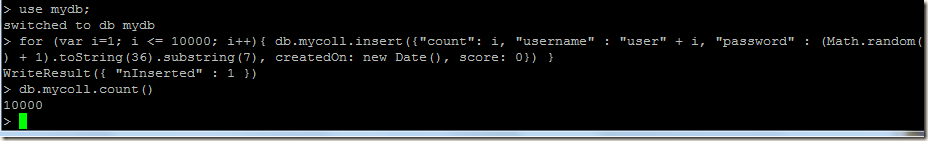

I will create a collection mycoll in the database mydb with 10,000 documents.
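One way to load test data like this from the command line is sketched below. The document shape (`n` and `score` fields) is an assumption made for illustration, and `insert_docs` is a hypothetical helper name.

```shell
# Insert $2 documents into mydb.mycoll, numbering them from $1.
# The document shape ({ n, score }) is assumed for illustration only.
insert_docs() {
  mongo mydb --quiet --eval "
    for (var i = $1; i < $1 + $2; i++) {
      db.mycoll.insert({ n: i, score: i % 100 });
    }
    print(db.mycoll.count());
  "
}

# Usage: insert_docs 0 10000
```

The same helper covers the later batches, for example `insert_docs 10000 10000` and `insert_docs 20000 5000`.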

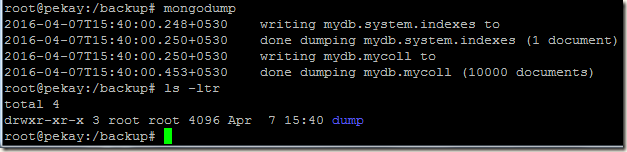

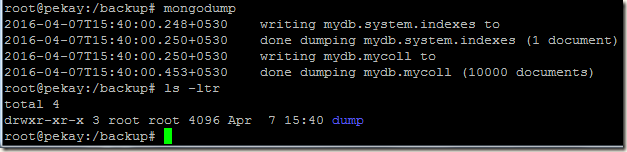

At this point we will take a backup. For the backup I am going to use mongodump.
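A base backup of this kind could be taken with a small wrapper like the one below. It is a sketch, not the exact command used here: `backup_db` is a hypothetical name, and the output path in the usage line is a placeholder.

```shell
# Dump one database into a target directory.
# $1 = database name, $2 = output directory
backup_db() {
  mongodump --db "$1" --out "$2"
}

# Usage: backup_db mydb /backup/mydb-base
```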

After taking the backup, we will make some more changes to the database.

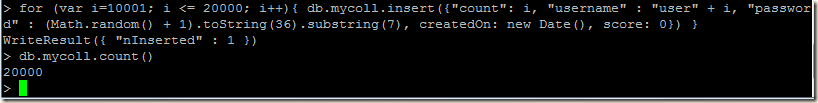

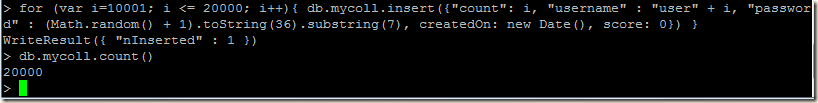

Inserting another 10000 documents:

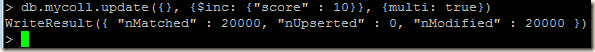

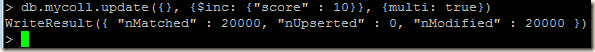

Update all the documents, incrementing the score of each by 10:
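An update of this kind can be expressed with the `$inc` operator. The sketch below assumes the field is named `score`, as in the text; `increment_scores` is a hypothetical helper name.

```shell
# Add 10 to the score field of every document in mydb.mycoll.
# Single quotes keep the shell from expanding $inc.
increment_scores() {
  mongo mydb --quiet --eval '
    db.mycoll.update({}, { $inc: { score: 10 } }, { multi: true });
  '
}

# Usage: increment_scores
```

Without `multi: true`, `update` would modify only the first matching document.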

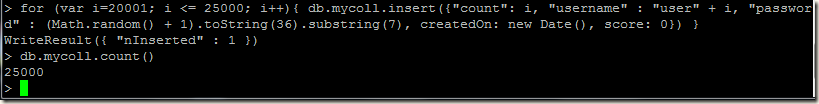

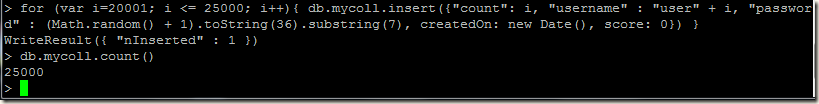

Then add another 5,000 documents:

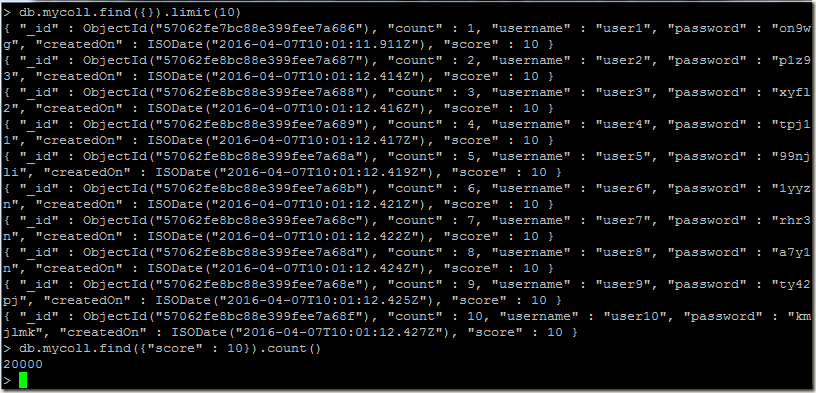

We now have 25,000 documents in our mycoll collection.

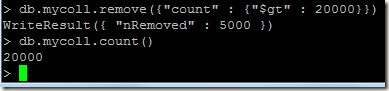

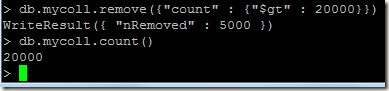

Now I will run a query that removes 5,000 documents.

Let's suppose the remove command above was fired by mistake and we want to recover the deleted documents.

We have a mongodump backup, but it was taken when the collection had only 10,000 documents, so on its own it is not sufficient to recover the deleted documents. So we are going to use the oplog, our saviour.

Take a backup of the oplog using mongodump:

MongoDB stores the oplog in the local database. The collection that holds it depends on the replication type:

1) Master-slave replication: the oplog is stored in the oplog.$main collection

2) Replica set: the oplog is stored in the oplog.rs collection

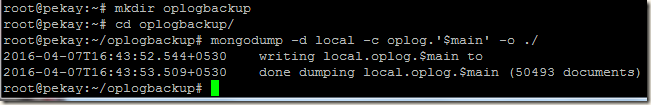

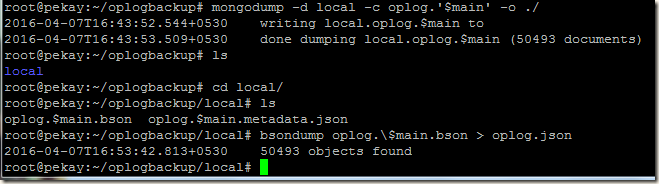

For this example we are using master-slave replication, so I am taking a mongodump backup of the oplog.$main collection.

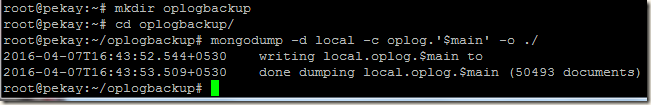

I created an oplogbackup directory and took a mongodump of the oplog.$main collection into it.
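The oplog dump described above could be scripted roughly like this. `dump_oplog` is a hypothetical helper name; the collection and directory names are the ones used in this tutorial.

```shell
# Dump only the oplog collection from the local database.
# $1 = oplog collection (oplog.$main or oplog.rs), $2 = output directory
dump_oplog() {
  mongodump --db local --collection "$1" --out "$2"
}

# Usage (single quotes stop the shell from expanding $main):
#   dump_oplog 'oplog.$main' oplogbackup
# The dump lands in oplogbackup/local/oplog.$main.bson
```

The quoting matters: written unquoted or in double quotes, `$main` would be treated as a shell variable and expand to an empty string.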

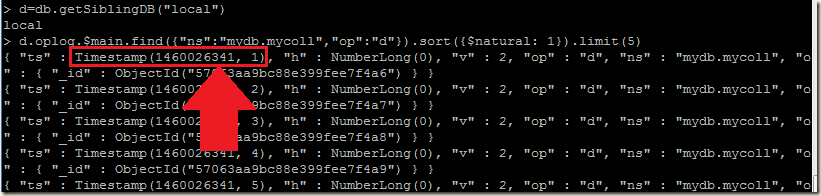

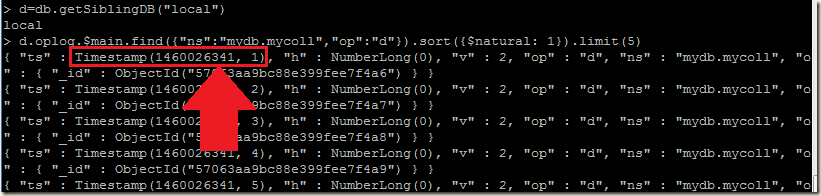

Find the recovery point:

As our mongod is running and the oplog is available, we can query the oplog.$main collection to find the recovery point. We executed the delete command on the mydb.mycoll collection, so our query will be as follows:

> d=db.getSiblingDB("local")

> d.oplog.$main.find({"ns":"mydb.mycoll","op":"d"}).sort({$natural: 1}).limit(5)

Our aim is to find the "ts" field of the first delete operation. In our case it is "ts" : Timestamp(1460026341, 1). Note this value down as 1460026341:1. It is our recovery point, and we will have to use this value in mongorestore.
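Rewriting the timestamp by hand is easy to get wrong, so the conversion can be done mechanically. This is a small sketch using sed; `to_oplog_limit` is a hypothetical helper name.

```shell
# Convert the shell's Timestamp(<seconds>, <ordinal>) notation
# to the <seconds>:<ordinal> form used for the recovery point.
to_oplog_limit() {
  printf '%s\n' "$1" |
    sed 's/.*Timestamp(\([0-9][0-9]*\), *\([0-9][0-9]*\)).*/\1:\2/'
}

to_oplog_limit '"ts" : Timestamp(1460026341, 1)'   # prints 1460026341:1
```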

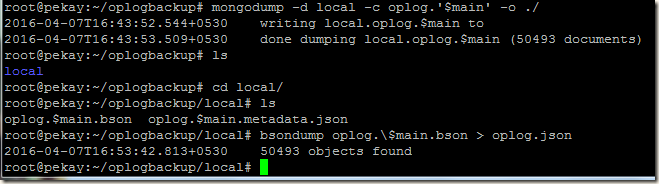

We can also run the bsondump tool to generate a JSON file from our oplog backup and then search that file for the recovery point.
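The bsondump route might look like the sketch below. `oplog_to_json` is a hypothetical helper name, and the grep patterns in the usage comment assume compact JSON output; the exact spacing can differ between tool versions, so adjust the patterns to what the file actually contains.

```shell
# Convert a BSON oplog dump to JSON (bsondump writes JSON to standard output).
# $1 = input .bson file, $2 = output .json file
oplog_to_json() {
  bsondump "$1" > "$2"
}

# Usage:
#   oplog_to_json 'oplogbackup/local/oplog.$main.bson' oplog.json
#   grep '"ns":"mydb.mycoll"' oplog.json | grep '"op":"d"' | head -n 1
```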

Recovering Database:

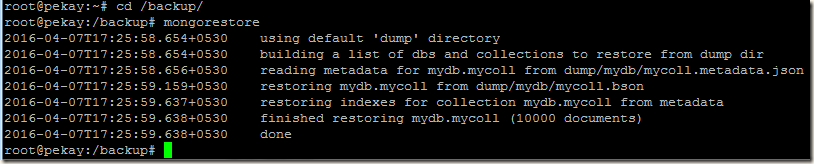

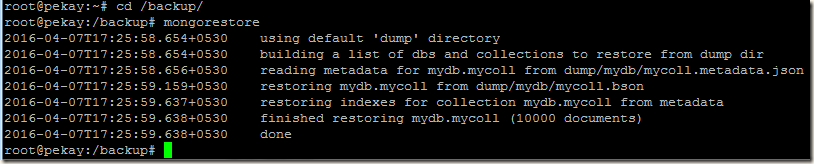

First I will restore the mongodump backup of the database. I am restoring the backup into a fresh mongod instance:

The mongorestore command restored the 10,000 documents of our mydb.mycoll collection. The remaining recovery will be done using the oplog backup.
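A base restore like the one just described could be wrapped as follows. This is a sketch under assumptions: `restore_base_backup` is a hypothetical name, and the port and path in the usage line are placeholders for wherever the fresh instance listens and the dump was written.

```shell
# Restore a mongodump directory into a mongod listening on a given port.
# $1 = port of the fresh mongod, $2 = directory produced by mongodump
restore_base_backup() {
  mongorestore --port "$1" "$2"
}

# Usage: restore_base_backup 27018 /backup/mydb-base
```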

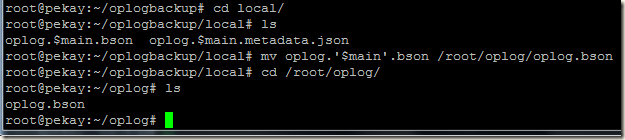

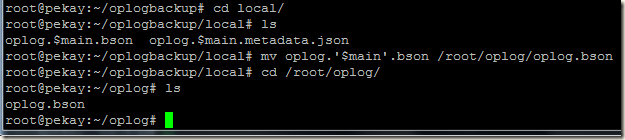

We can replay the oplog using mongorestore's --oplogReplay option. To replay the oplog, mongorestore requires the oplog backup file to be named oplog.bson. So I am going to move our oplog backup file oplog.$main.bson into another directory and rename it oplog.bson.
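The rename step can be sketched as below. Note one deliberate difference from the text: this version copies rather than moves, so the original dump stays untouched. `stage_oplog` and the `oplogreplay` directory name are hypothetical.

```shell
# Place the dumped oplog in its own directory under the name oplog.bson.
# $1 = dumped oplog file, $2 = directory to replay from
stage_oplog() {
  mkdir -p "$2" && cp "$1" "$2/oplog.bson"
}

# Usage: stage_oplog 'oplogbackup/local/oplog.$main.bson' oplogreplay
```

Keeping oplog.bson alone in its directory means the replay does not pick up any other dumped collections.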

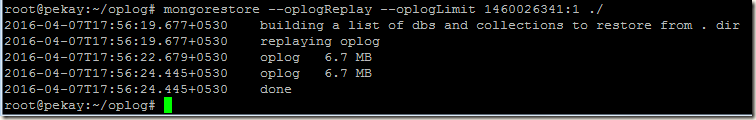

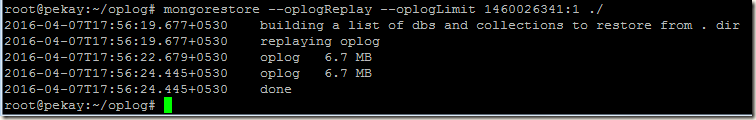

For point-in-time recovery, mongorestore has another option, --oplogLimit. It takes a timestamp in <seconds>[:ordinal] format and instructs mongorestore to apply only the oplog entries before that timestamp. So the restore operation runs up to, but not including, the provided timestamp.
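Putting the two options together, the replay might be invoked as in this sketch. `replay_oplog` is a hypothetical helper name, and the port and directory in the usage line are placeholders; the timestamp is the recovery point found earlier.

```shell
# Replay oplog.bson from a directory, stopping before the recovery point.
# $1 = port of the restored instance
# $2 = recovery point as <seconds>:<ordinal>
# $3 = directory containing oplog.bson
replay_oplog() {
  mongorestore --port "$1" --oplogReplay --oplogLimit "$2" "$3"
}

# Usage: replay_oplog 27018 1460026341:1 oplogreplay
```

Because only entries before the limit are applied, passing the timestamp of the first delete leaves that delete, and everything after it, out of the replay.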

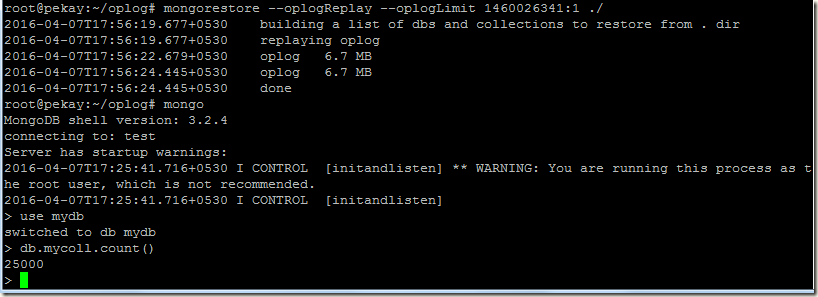

So running our oplog replay:

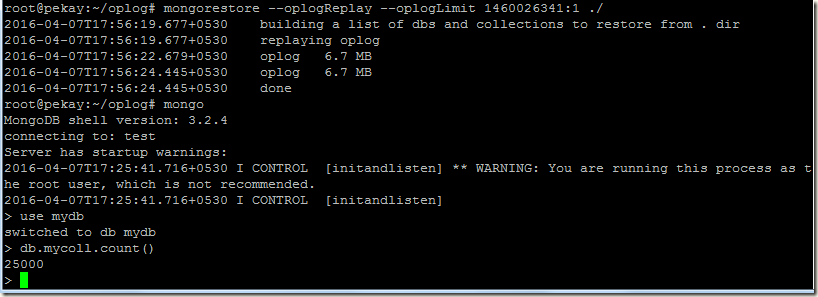

Database recovery is complete. Now let's check the recovered data.

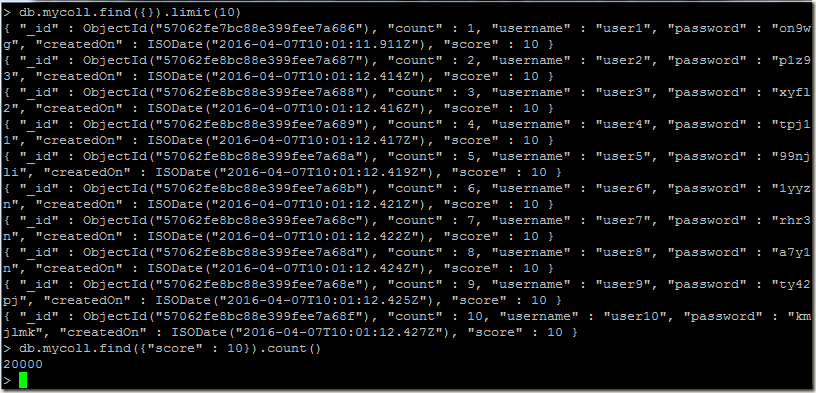

Yes, we can see that all 25,000 documents are back, so we were able to recover the 5,000 deleted documents.

Our 20,000 updated documents, with their scores incremented by 10, are also present.

So our point-in-time recovery is successful.

Note: I used MongoDB 3.2.4 on Ubuntu 14.04 for this tutorial.

