This is my first blog post of 2016 and my first step into the exciting Hadoop world; a little late, but better late than never.

In this post I will show you how to convert or migrate an existing single namenode setup to a Federated namenode setup.

I am not going to write about the details of namenode federation. There are plenty of resources available online; please go through any of them to understand the concepts. You may also refer to the official Hadoop documentation: https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/Federation.html

So, starting my fictitious story:

Our existing HDFS cluster had a single namenode named masternode and three datanodes: datanode1, datanode2 and datanode3. This setup was working well for our IT department users. One fine day, the manager came to the Hadoop admin and said that two more departments, sales and analytics, wanted to use the Hadoop cluster, and that both departments wanted their data to be managed by different namenodes. The analytics team was going to store a lot of files, and that should not affect the performance of the existing namenode. Our datanodes had a large amount of free storage, so we wanted to use that free space and also share the namenode load by adding another one. So we decided to add another namenode and keep the metadata for the analytics department’s files on the new namenode.

So in the new setup we will have two namenodes, masternode (the existing one) and masternode1 (the new one), and the three datanodes datanode1, datanode2 and datanode3. The analytics team’s data will go to masternode1 and the sales team’s data will be on masternode. The existing users will access their data as before.

The sales team will store their data in the /sales folder in our HDFS cluster.

The analytics team will store their data in the /analytics folder in our HDFS cluster.

I am not going to cover all the installation and setup details here; I will write only the steps that are necessary to explain this topic.

Step 1:

Install Hadoop on the new namenode masternode1.

Step 2:

Edit the hdfs-site.xml file on masternode and add the settings related to HDFS federation:

We are adding two namespaces: sales and analytics. The three datanodes will store blocks for both the namespaces.

<property>

<name>dfs.nameservices</name>

<value>sales,analytics</value>

</property>

Bind properties for our existing namenode masternode

<property>

<name>dfs.namenode.rpc-address.sales</name>

<value>masternode:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.sales</name>

<value>masternode:50070</value>

</property>

Bind properties for the new namenode masternode1

<property>

<name>dfs.namenode.rpc-address.analytics</name>

<value>masternode1:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.analytics</name>

<value>masternode1:50070</value>

</property>
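Before restarting anything, it can help to confirm that every name listed in dfs.nameservices has both of its address properties. The following is only a minimal sketch, assuming the properties above are wrapped in the usual configuration root element; the helper names load_properties and missing_addresses are my own and are not part of Hadoop.

```python
import xml.etree.ElementTree as ET

# Assumed sample: the properties from this step inside a <configuration> root.
HDFS_SITE = """<configuration>
  <property><name>dfs.nameservices</name><value>sales,analytics</value></property>
  <property><name>dfs.namenode.rpc-address.sales</name><value>masternode:8020</value></property>
  <property><name>dfs.namenode.http-address.sales</name><value>masternode:50070</value></property>
  <property><name>dfs.namenode.rpc-address.analytics</name><value>masternode1:8020</value></property>
  <property><name>dfs.namenode.http-address.analytics</name><value>masternode1:50070</value></property>
</configuration>"""


def load_properties(xml_text):
    """Return a dict of property name -> value from Hadoop-style config XML."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def missing_addresses(props):
    """List the rpc/http address keys that are absent for any nameservice."""
    services = [s.strip() for s in props.get("dfs.nameservices", "").split(",") if s.strip()]
    missing = []
    for ns in services:
        for kind in ("rpc-address", "http-address"):
            key = f"dfs.namenode.{kind}.{ns}"
            if key not in props:
                missing.append(key)
    return missing


if __name__ == "__main__":
    # An empty list means every nameservice has both addresses defined.
    print(missing_addresses(load_properties(HDFS_SITE)))
```

To check a real file, you could read the contents of your own hdfs-site.xml and pass the text to load_properties instead of the sample string.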

Step 3:

We are going to use ViewFS and define which paths map to which namenode. For details about ViewFS, see https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-hdfs/ViewFs.html

We are going to edit the existing core-site.xml file so that clients load the ViewFS plugin and look for the mount table information in the configuration file:

<property>

<name>fs.defaultFS</name>

<value>viewfs:///</value>

</property>

Map /sales folder to masternode

<property>

<name>fs.viewfs.mounttable.default.link./sales</name>

<value>hdfs://masternode:8020/sales</value>

</property>

Map /analytics folder to masternode1

<property>

<name>fs.viewfs.mounttable.default.link./analytics</name>

<value>hdfs://masternode1:8020/analytics</value>

</property>
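To make the effect of the mount table concrete, here is a small sketch of how a client-side path could be mapped to a namenode URI. It is a simplified model of the idea and not Hadoop's actual ViewFS implementation; the resolve function and the MOUNT_TABLE dictionary are names I made up for illustration.

```python
# Mount table copied from the two link properties above.
MOUNT_TABLE = {
    "/sales": "hdfs://masternode:8020/sales",
    "/analytics": "hdfs://masternode1:8020/analytics",
}


def resolve(path, mounts=MOUNT_TABLE):
    """Map a viewfs-style path to its target URI using longest-prefix match."""
    best = None
    for link in mounts:
        # Match the mount point itself or anything below it, on whole components.
        if path == link or path.startswith(link.rstrip("/") + "/"):
            if best is None or len(link) > len(best):
                best = link
    if best is None:
        # In this simplified model, paths outside every mount point are errors.
        raise FileNotFoundError(f"no mount point for {path}")
    return mounts[best] + path[len(best):]


if __name__ == "__main__":
    print(resolve("/analytics/Centers2015.csv"))
    print(resolve("/sales/zookeeper-3.4.6.tar.gz"))
```

In this model a path such as /tmp/foo raises an error because it is not under any mount point, which is worth keeping in mind for any existing directories that clients still need to reach through the mount table.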

Restart the existing namenode masternode for the changes to take effect.

Step 4:

Copy the new hdfs-site.xml and core-site.xml files to masternode1, datanode1, datanode2 and datanode3.

Step 5:

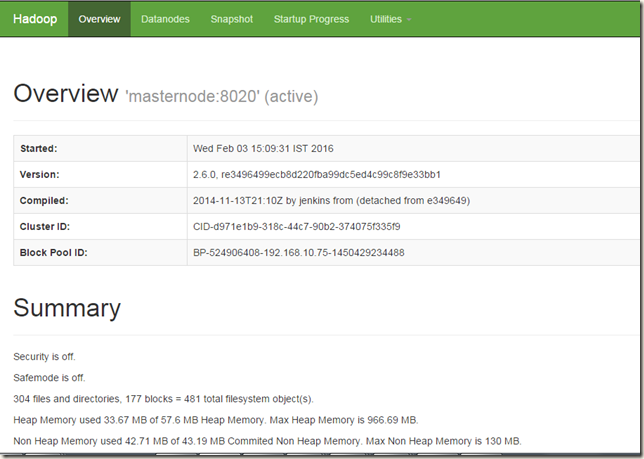

We are already using the namenode masternode, and its clusterID was already generated (a cryptic ID, CID-d971e1b9-318c-44c7-90b2-374075f335f9, generated automatically when we formatted the existing namenode masternode). I searched a lot for a way to change the clusterID, but most of the resources said that I would have to format the namenode to generate a new clusterID of my choice, which was not possible in our case as it would destroy the existing namenode data. So I copied the existing clusterID of masternode and am going to use the same clusterID on the new namenode masternode1, so that it will be part of the same cluster.
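If you prefer to read the clusterID from disk, the namenode stores it in the VERSION file under the current directory of its metadata directory (the location set by dfs.namenode.name.dir). The sketch below parses such a file; the sample text is hypothetical apart from the clusterID from this post, and read_cluster_id is just an illustrative helper name.

```python
# Hypothetical VERSION contents; only the clusterID value comes from this post.
SAMPLE_VERSION = """\
#Sample file for illustration
namespaceID=123456789
clusterID=CID-d971e1b9-318c-44c7-90b2-374075f335f9
cTime=0
storageType=NAME_NODE
layoutVersion=-56
"""


def read_cluster_id(version_text):
    """Return the clusterID value from VERSION-style key=value text, or None."""
    for line in version_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if sep and key.strip() == "clusterID":
            return value.strip()
    return None


if __name__ == "__main__":
    print(read_cluster_id(SAMPLE_VERSION))
```

On a real namenode you would pass in the text of the actual VERSION file rather than the sample string.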

Step 6:

Format the new namenode masternode1, giving it the same clusterID as masternode:

hadoop@masternode1:~$ hdfs namenode -format -clusterID CID-d971e1b9-318c-44c7-90b2-374075f335f9

Start the new namenode masternode1

Step 7:

Reload the configuration files of all the three datanodes.

hadoop@datanode1:~$ hdfs dfsadmin -refreshNamenodes datanode1:50020

hadoop@datanode2:~$ hdfs dfsadmin -refreshNamenodes datanode2:50020

hadoop@datanode3:~$ hdfs dfsadmin -refreshNamenodes datanode3:50020
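With more than a handful of datanodes, typing the refresh command per host gets tedious. As a rough sketch, the same commands can be generated from a host list; the refresh_commands helper and the DATANODES list are my own illustration, and the port 50020 is simply the one used in the commands above.

```python
# Datanode hostnames from this post; adjust for your own cluster.
DATANODES = ["datanode1", "datanode2", "datanode3"]


def refresh_commands(hosts, ipc_port=50020):
    """Build one 'hdfs dfsadmin -refreshNamenodes host:port' command per datanode."""
    return [
        ["hdfs", "dfsadmin", "-refreshNamenodes", f"{host}:{ipc_port}"]
        for host in hosts
    ]


if __name__ == "__main__":
    for cmd in refresh_commands(DATANODES):
        # Only print here; pass each list to subprocess.run(cmd, check=True)
        # on a machine with the Hadoop client installed to actually execute it.
        print(" ".join(cmd))
```

The sketch only prints the commands, so you can review them before running anything against the cluster.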

Step 8:

Create the folders for /sales and /analytics mapping:

$ hdfs dfs -mkdir hdfs://masternode:8020/sales

$ hdfs dfs -mkdir hdfs://masternode1:8020/analytics

All done. To test, I will copy two files into the two directories that we set up:

$ hdfs dfs -put Centers2015.csv /analytics

$ hdfs dfs -put zookeeper-3.4.6.tar.gz /sales

Each namenode has two URLs:

1) http://masternode:50070/dfshealth.html : View of the namespace managed by this namenode.

2) http://masternode:50070/dfsclusterhealth.jsp : Aggregated cluster view which includes all the namenodes of the cluster.

Using the above links, we can check whether our files are in the correct namespace.

1 comment:

Hi Pranab,

Good Article. How to federate an existing Hadoop cluster with HA enabled namenode? I have a cluster with huge data, I don't want to lose any data. How to make it possible?
